DOCUMENTATION OF ARTWORKS & PROCESSES

In the following section I will continue developing earlier concepts and discuss the polarized piece I made for Unit 2, as well as why I decided to make four other, smaller ones. A major change was moving the initial image-making process from analogue photography to images generated by Artificial Intelligence (AI). I made this change because I wanted to incorporate my research on speculative image making into the final pieces.

The generation of these images begins with intent. There is a space for conceptualizing and thinking about what the piece is going to do and how it is supposed to unfold. What are its potential effects and impact? I try to visualize different versions, pick some, and later unpick them as the process becomes more complex.

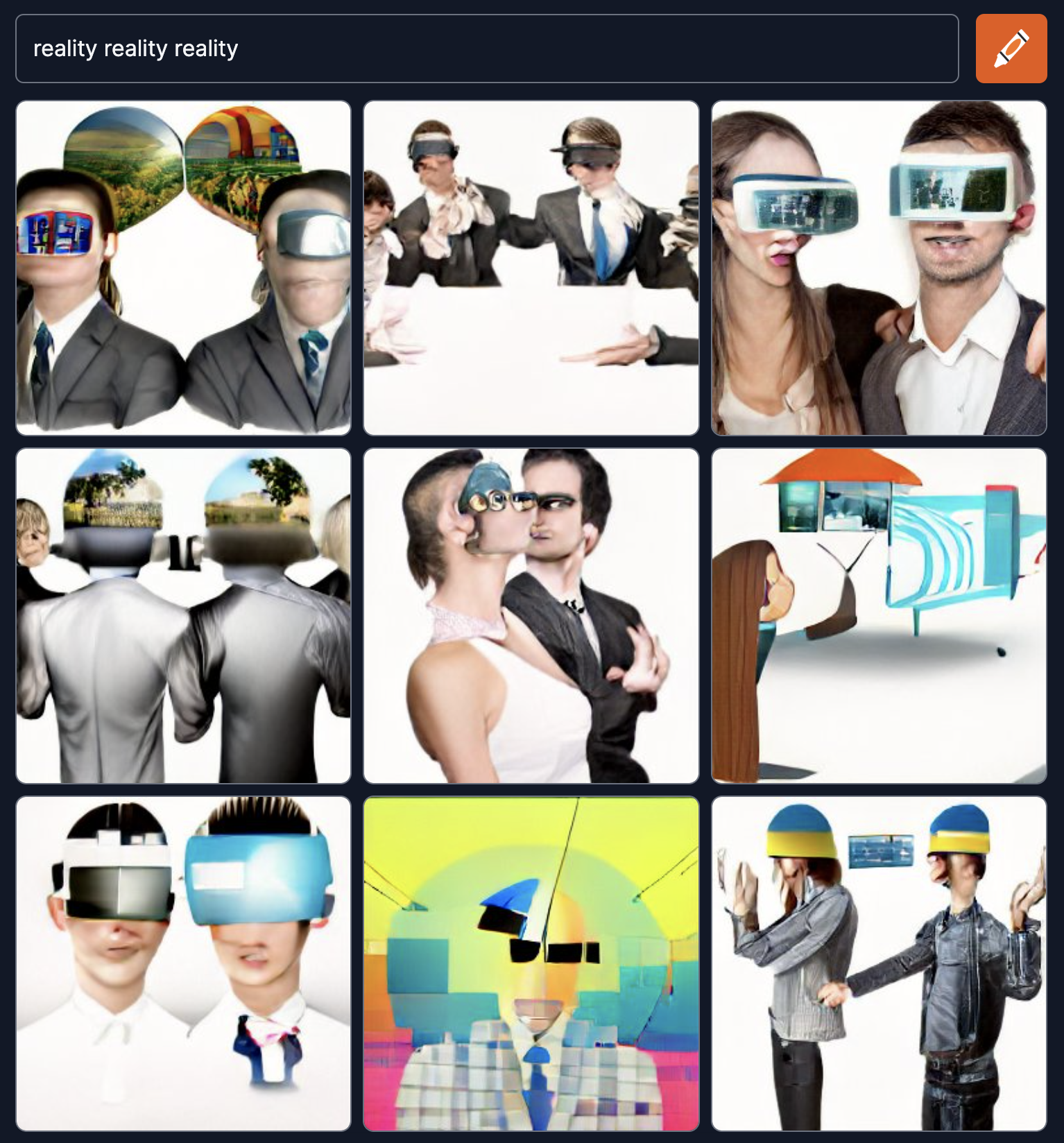

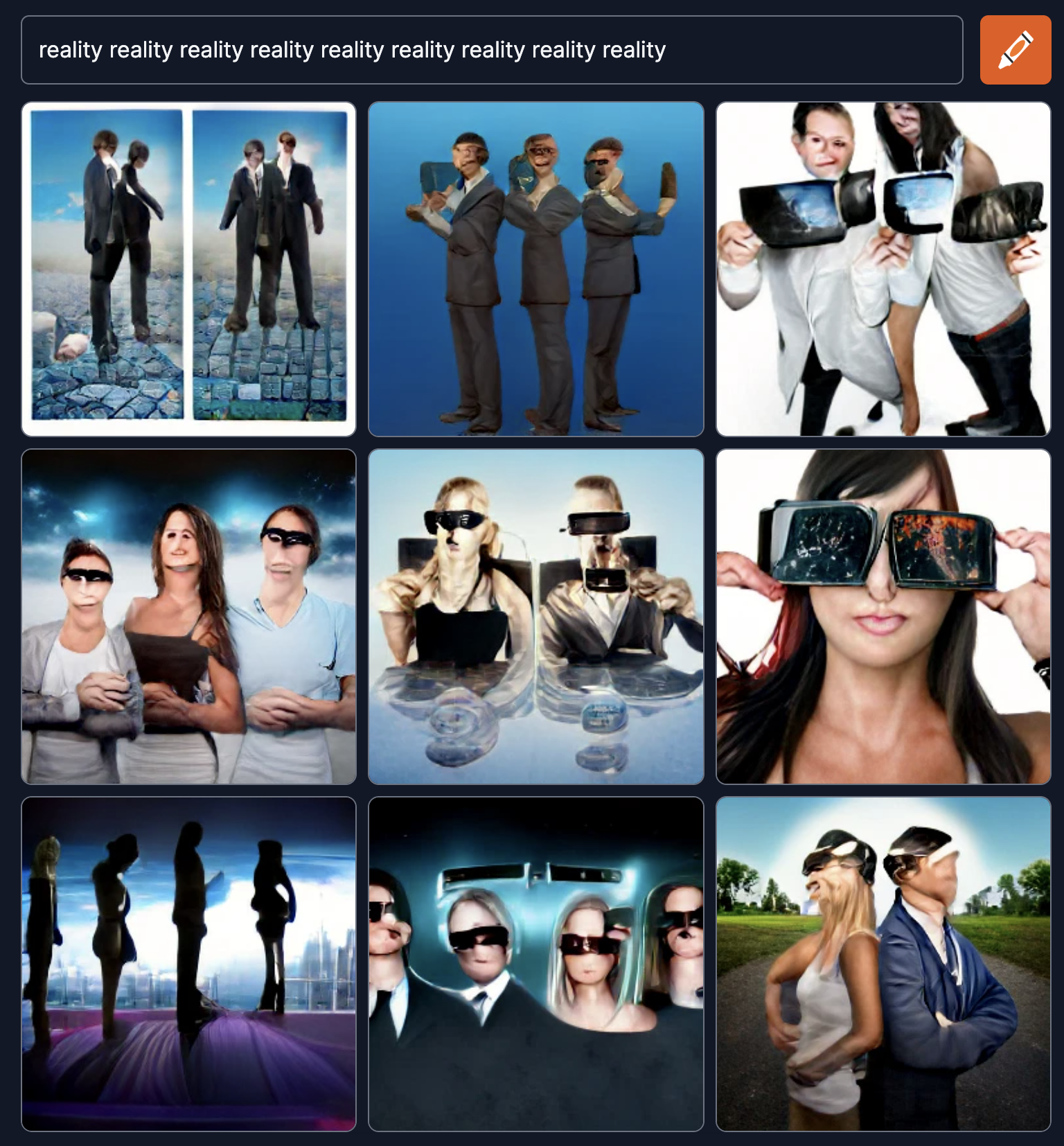

In relation to Unit 2, I decided to use AI because, for me, it shows an interconnection between the past (the image library), the present (the image), and the future (the speculative). I also believe in symbolism as a way to address universality. The first step of the process is choosing suitable AI software and deciding what the search input will be.

I chose the word “reality” for the search. The generator I used is called DALL·E mini. What interested me about this platform was that it was a free prototype that carried the following disclaimer:

“Bias and Limitations: While the capabilities of image generation models are impressive, they may also reinforce or exacerbate societal biases. While the extent and nature of the biases of the DALL·E mini model have yet to be fully documented, given the fact that the model was trained on unfiltered data from the Internet, it may generate images that contain stereotypes against minority groups. Work to analyze the nature and extent of these limitations is ongoing, and will be documented in more detail in the DALL·E mini model card.”

(Dall.e, 2022)

Another interesting disclaimer on the page, found in the model card, warned about the misuse of this technology:

“Misuse and Malicious Use:

Using the model to generate content that is cruel to individuals is a misuse of this model. This includes:

· Generating demeaning, dehumanizing, or otherwise harmful representations of people or their environments, cultures, religions, etc.

· Intentionally promoting or propagating discriminatory content or harmful stereotypes.

· Impersonating individuals without their consent.

· Sexual content without consent of the people who might see it.

· Mis- and disinformation

[…].” (Dall.e, 2022)

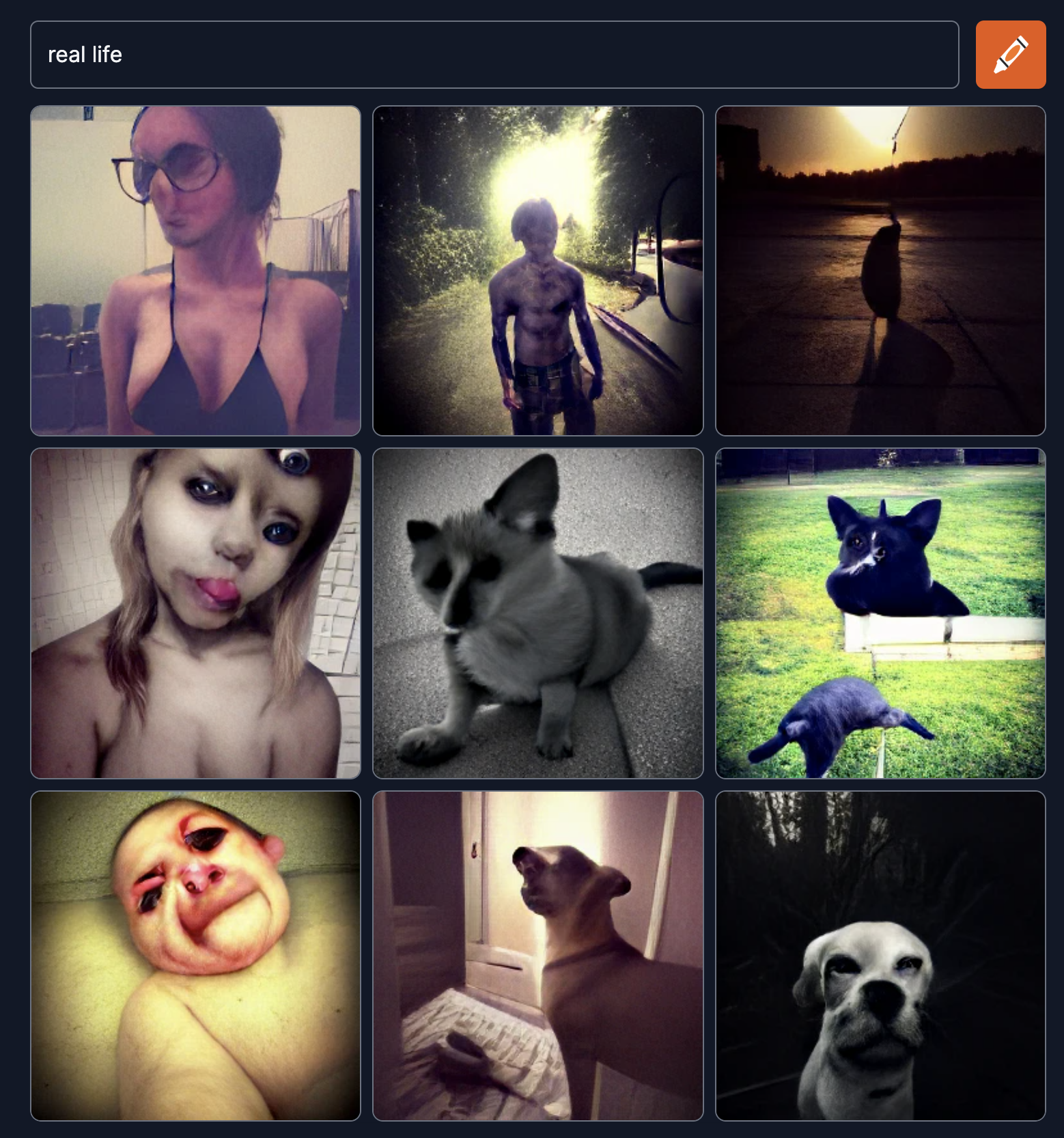

In a way, this meant that people who occupy a shared or collective consciousness were more vulnerable to being altered, because more information about them had been uploaded. I was immediately interested in AI’s potential as a visual average of humankind’s media content database. By understanding AI’s behavior and visual production, we can learn about ourselves. I ran multiple searches until I was satisfied with the resulting images. The selection also depended on how well the 3D-printed mold would respond to the vacuum forming process.

After the AI images are saved, an extra step uses AI to enhance the original image: another piece of software interprets how the small image would be completed at a larger scale. This seemingly innocuous step shows two different algorithmic tools (one drawing on an image library, the other focused on intelligent recognition) working together to produce the highest-quality version in the entire process. (Dall-e, 2022)

From here, the image distortion process and the idea of deconstructing the image begin. One example is the image exhibited in the middle, at a larger scale, at Dilston Gallery. The original file was 14KB, and after the enhancement process it was 72KB. This process can be repeated multiple times using different AI enhancement software. The image upscaler I used is called Zyro (Zyro, 2019). Zooming in on the following images shows the difference in quality between them and how the software softens and enhances the image:

With the pixel as the starting point for setting quality, I then process the image in different ways to remove its traces. I use a pre-existing input of stock images, although each program has its own library of previously generated visual media. The program uses linguistic tagging to categorize information that is later averaged into a single composition.

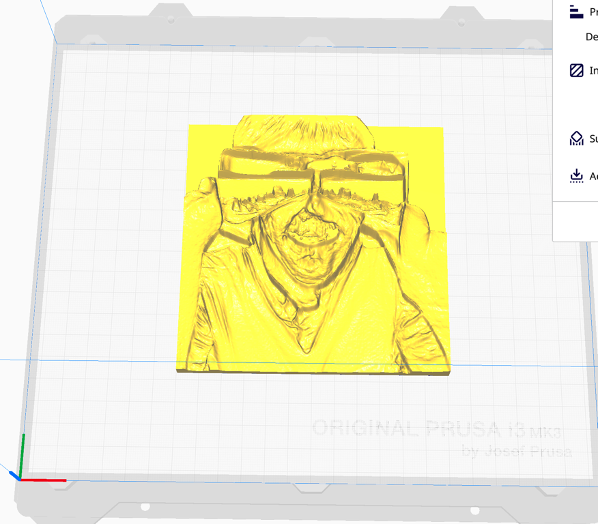

As shown above, word choice is an essential part of the process because it sets the general guideline. After the image is AI generated and enhanced, I prepare it for the 2D-to-3D digital transfer. I reshape the square images into circles so they fit the circular design of the final display. Showing the original version would mean having a square inside a circle, which would still be an interesting option.
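The square-to-circle step amounts to a circular mask: every pixel outside the circle inscribed in the square is replaced by a background value. The sketch below is a minimal pure-Python illustration, assuming the image is held as a square grid of greyscale values; the function name `crop_to_circle` and the `fill` parameter are hypothetical, and the actual reshaping may well have been done by hand in an image editor.

```python
from typing import List

def crop_to_circle(pixels: List[List[int]], fill: int = 0) -> List[List[int]]:
    """Keep pixels inside the circle inscribed in a square image; replace the rest.

    `pixels` is a square list of rows (e.g. greyscale values 0-255).
    `fill` is a hypothetical background value for the area outside the circle.
    Returns a new grid and leaves the input unchanged.
    """
    n = len(pixels)
    if any(len(row) != n for row in pixels):
        raise ValueError("expected a square image")
    centre = (n - 1) / 2   # pixel-centre coordinate of the middle of the image
    radius = n / 2         # radius of the inscribed circle
    out = []
    for y, row in enumerate(pixels):
        new_row = []
        for x, value in enumerate(row):
            inside = (x - centre) ** 2 + (y - centre) ** 2 <= radius ** 2
            new_row.append(value if inside else fill)
        out.append(new_row)
    return out
```

For example, on a 5×5 grid of white (255) pixels, only the four corner pixels fall outside the circle and are set to 0. Keeping the circle inscribed, rather than cropping a smaller one, preserves as much of the generated image as the round display allows.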

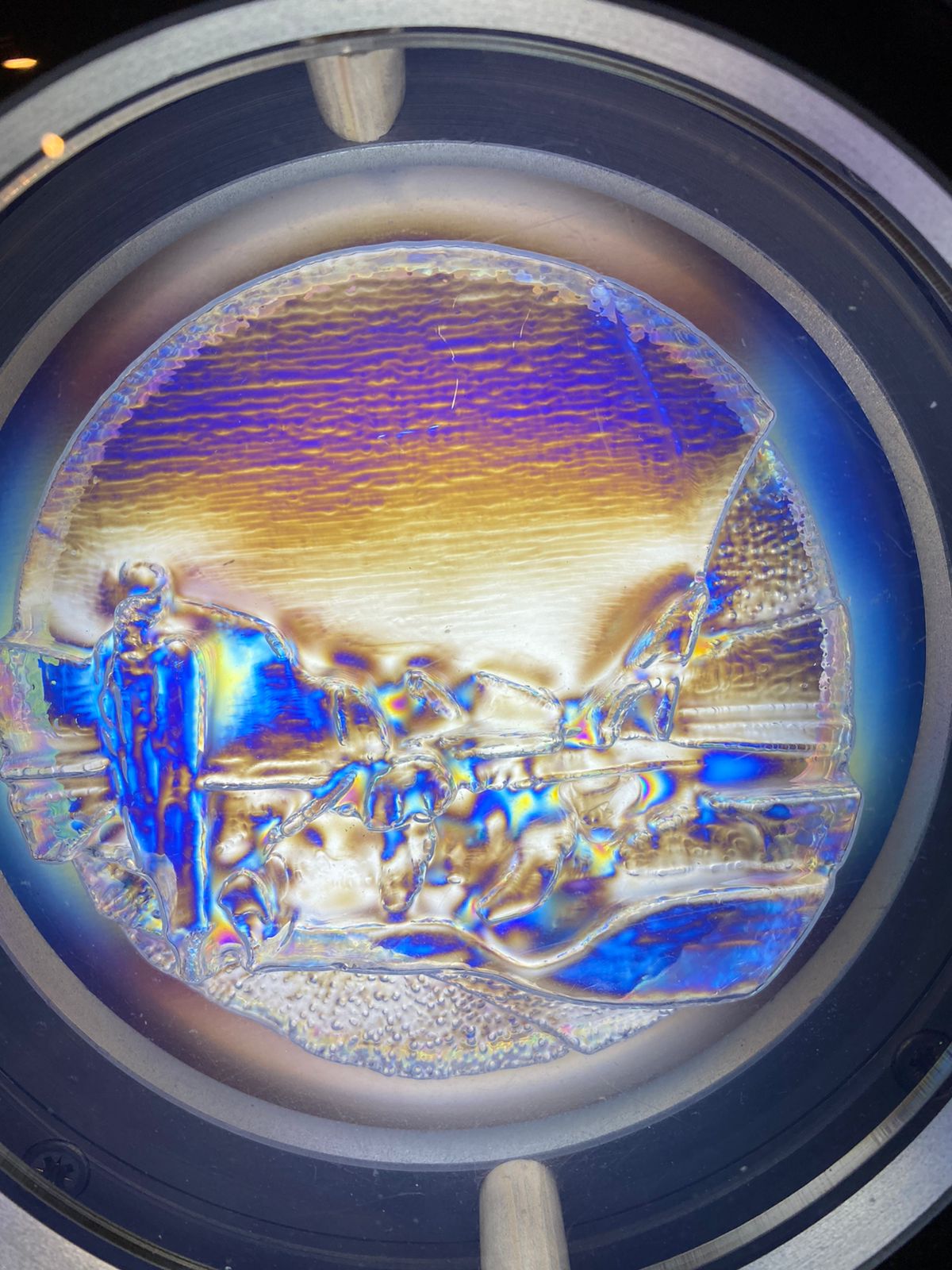

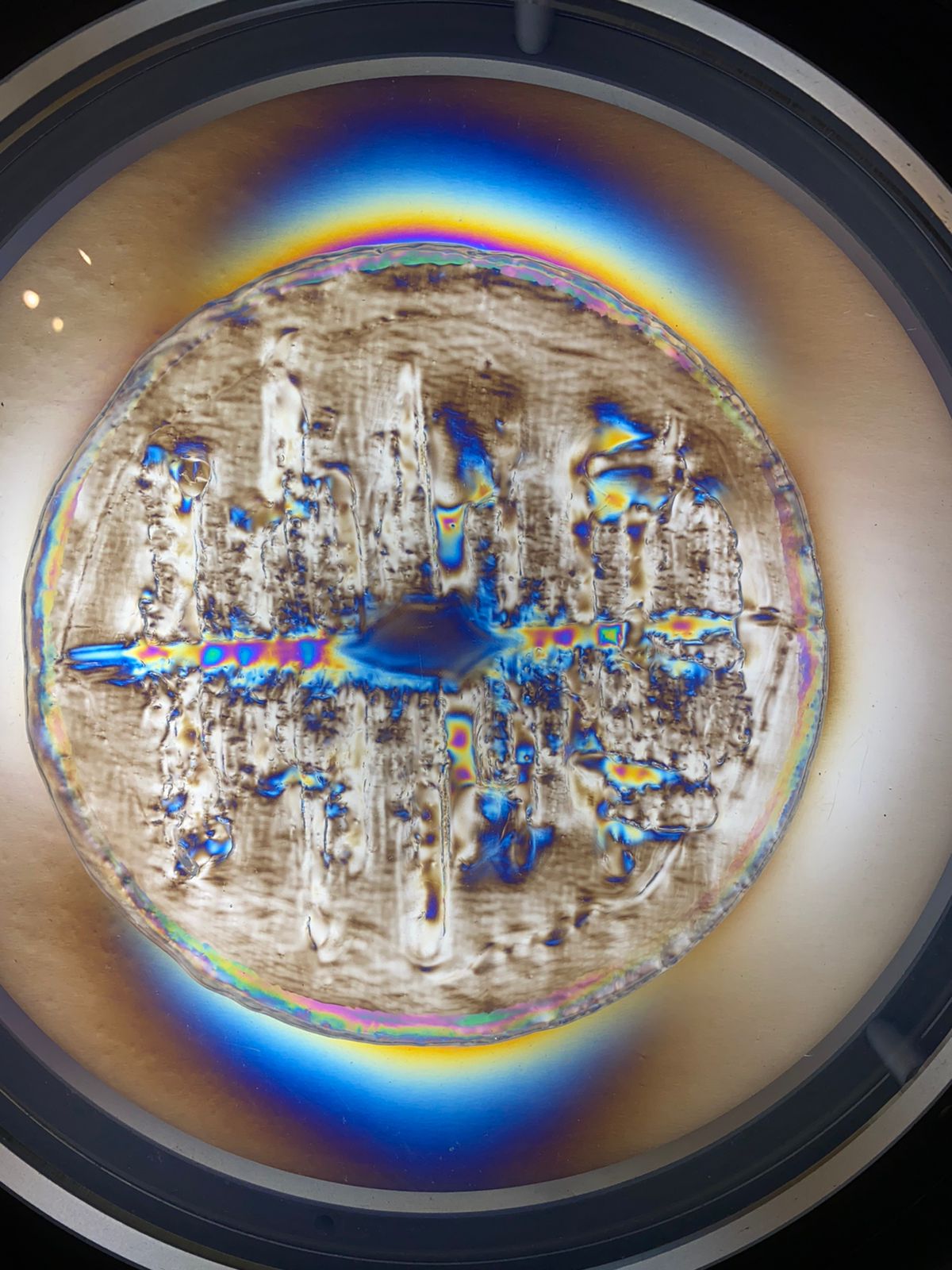

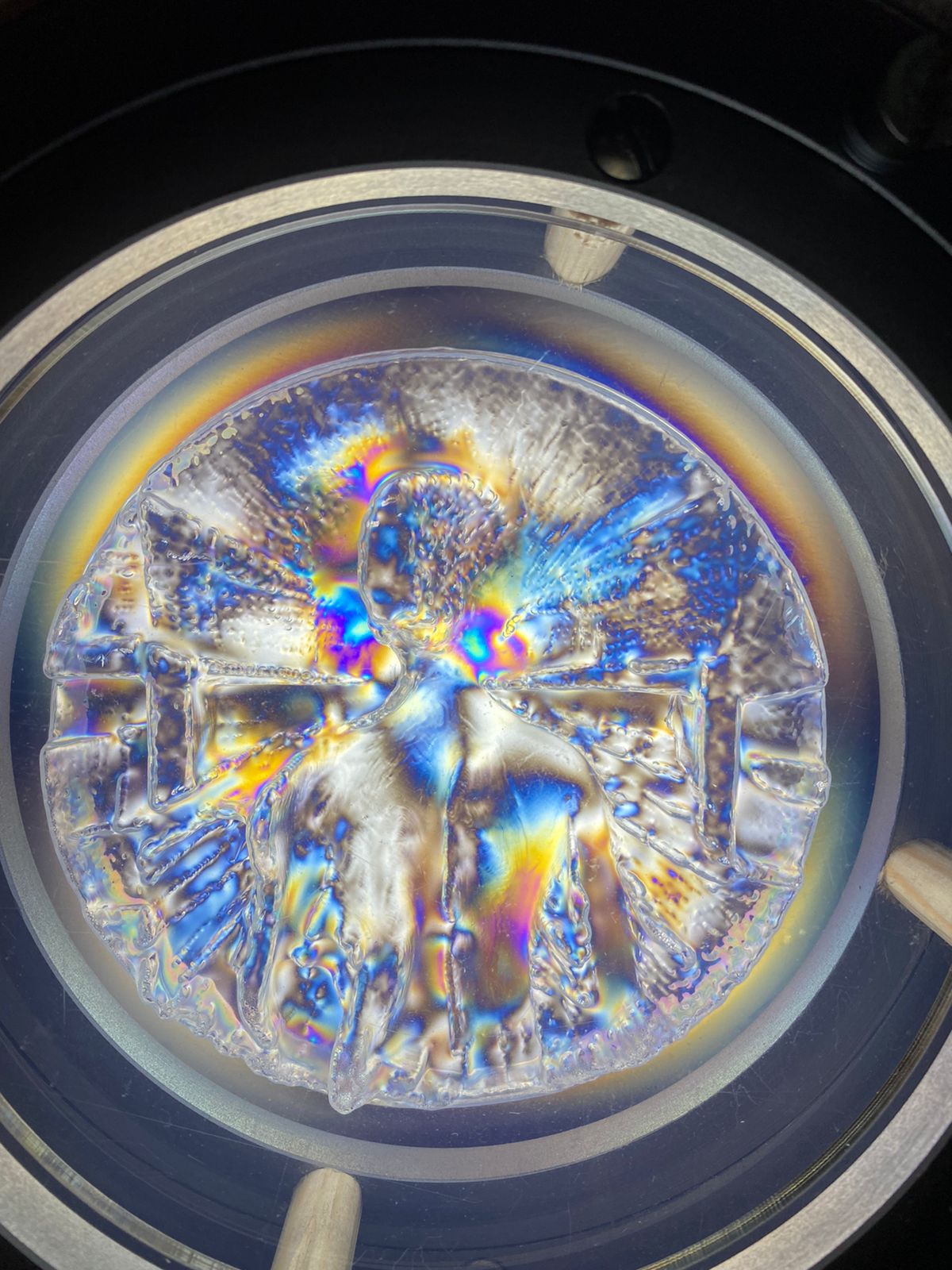

Another important change is converting the image to greyscale, which ensures that pixel values are read as height on a surface. One interesting example is the second piece of the series, where a skyline gradient translates into a horizon that grows in height exponentially. The last print of the series shows a character lit from behind, so the perspective lines also grow exponentially as they approach the borders of the image.
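The idea of reading grey values as height can be sketched as a linear mapping from each pixel's grey level to a surface height. This is a minimal illustration under stated assumptions, not the slicing software actually used: it assumes lighter pixels become higher (many image-to-relief tools let you invert this), uses the ITU-R BT.601 luma weights for the greyscale conversion, and the `base_mm` and `relief_mm` values are hypothetical.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]

def to_grey(rgb: RGB) -> int:
    """Convert an RGB pixel to a 0-255 grey level using ITU-R BT.601 luma weights."""
    r, g, b = rgb
    return round(0.299 * r + 0.587 * g + 0.114 * b)

def height_map(pixels: List[List[RGB]], base_mm: float = 1.0,
               relief_mm: float = 5.0,
               lighter_is_higher: bool = True) -> List[List[float]]:
    """Map each pixel's grey level linearly to a surface height in millimetres.

    base_mm: hypothetical minimum thickness of the print.
    relief_mm: hypothetical extra height given to a fully white pixel
    (or fully black pixel when lighter_is_higher is False).
    """
    heights = []
    for row in pixels:
        out_row = []
        for px in row:
            level = to_grey(px) / 255      # 0.0 for black, 1.0 for white
            if not lighter_is_higher:
                level = 1.0 - level        # invert: darker pixels become higher
            out_row.append(base_mm + relief_mm * level)
        heights.append(out_row)
    return heights
```

With the defaults, a black pixel becomes 1.0 mm high and a white one 6.0 mm. Because the mapping is linear, a smooth gradient in the image, such as the skyline in the second piece, becomes a smooth slope whose steepness follows the gradient itself.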

Once the image is printed, I use the resulting piece as a mold for vacuum forming. This is the part of the process where I think there is room for further research into using leftover or recycled plastic instead of HIPS (High Impact Polystyrene), which is harmful to the environment, scratches easily and is very wasteful.

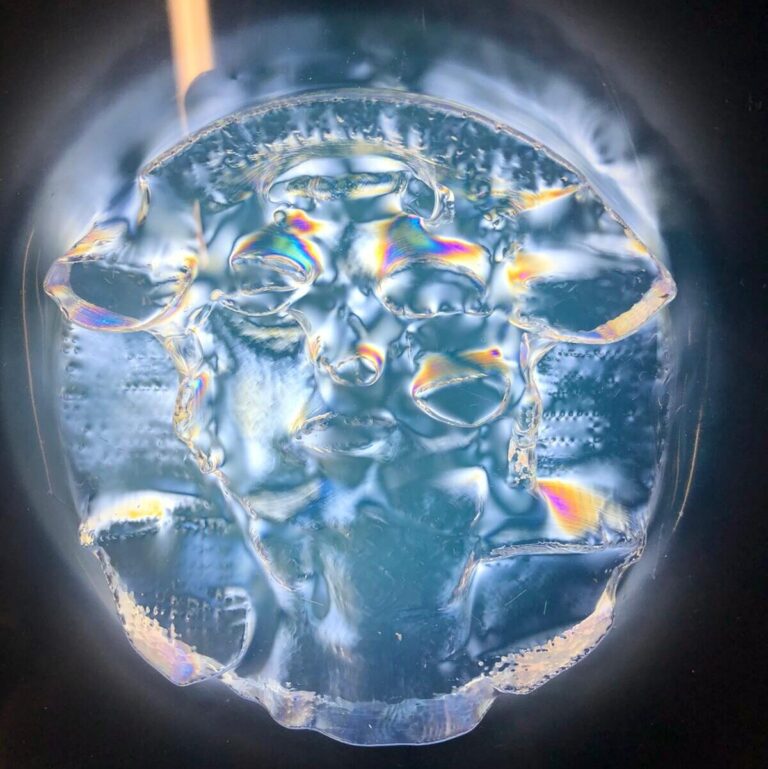

The vacuum forming process transfers the 3D print onto a transparent surface. I tried polarizing transparent filament, but the amount of accumulated melted material makes it too opaque to polarize. An acrylic (resin) print is not an option for direct polarization either, as the resin does not polarize. That printing process does, however, allow more detail, so I could use it in future projects where I want to maximize the quality of the vacuum-formed pieces, especially for repeated pulls, since filament molds start losing sharpness after a number of uses.

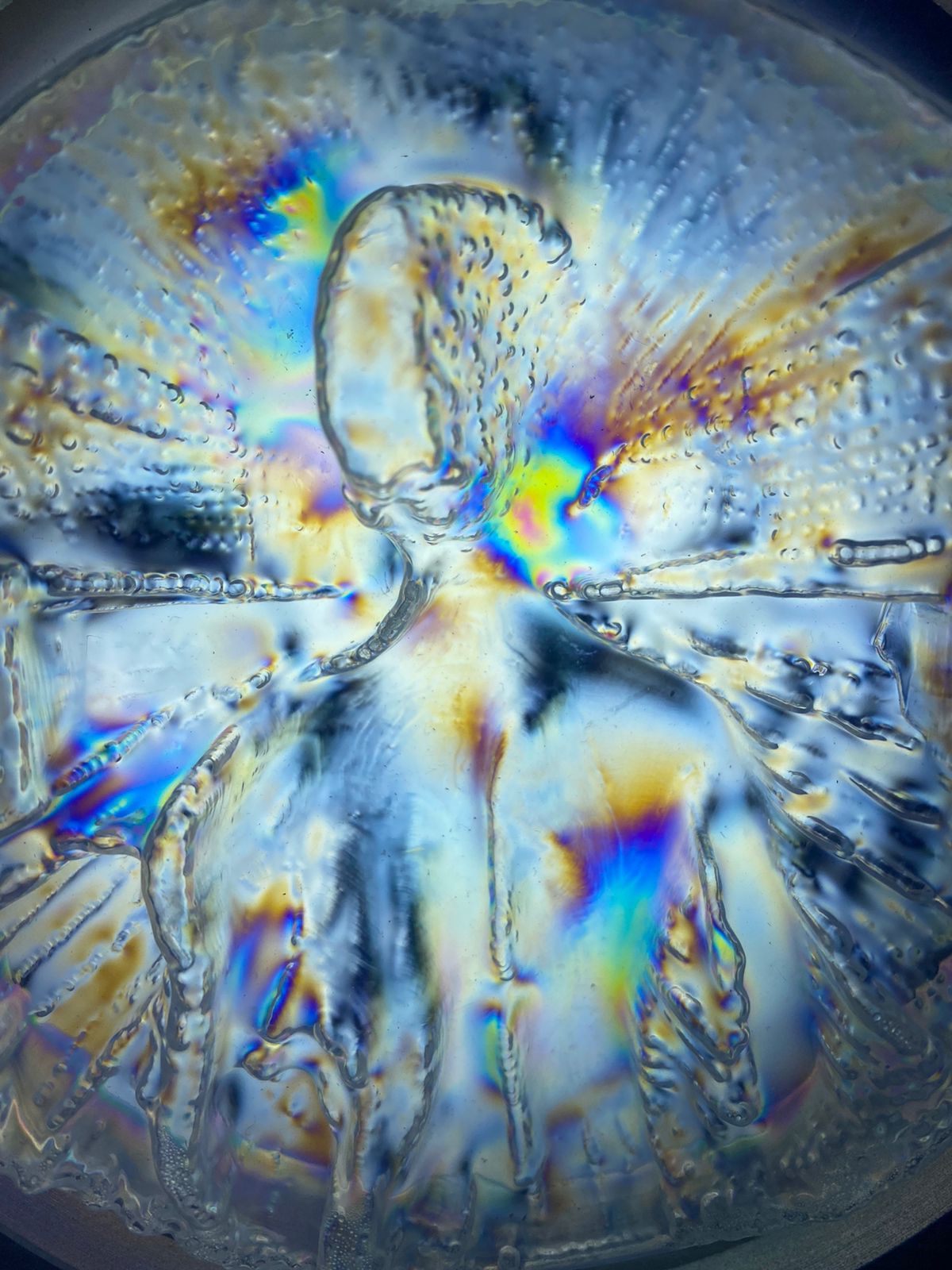

By this stage, however, the pixel is fully diluted, so from here on the approach to the image is sculptural. The final goal is for light to be the main means of reading the image, as a gradient map of stressed material exposed to our limited frequencies of seeing. For the main piece in the middle, the print had a mistake: it shifted while printing, so the piece was not symmetrical and was empty on the sides. I could have left it like that and polarized the printing mistake; instead, I used self-hardening clay to fill the gaps. My fingerprints shaping the image are now embedded in the final piece as well.

The pixel is replaced by the trapped air in a heated surface. It is a second print, an analogue one: a transfer of information. It reminds me of carrying the USB stick with the sliced final file to the 3D printer. That walk from the computer to the printer is also a step in the preparation of information transfer. All these traces, innocuous steps and information are then contained in the sculptural piece.

A material exchange happens when assembling the final print. The piece comes from a place of material exploration and of questioning the limits of where the image is placed and perceived. The vacuum-formed piece exists as a separate object with the potential to be polarized, but it does not show the stress it contains unless it is back-lit between linear polarizers and rotated.
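Why the stress only becomes visible between polarizers can be summarized with two standard textbook relations of photoelasticity. They are given here as general background, assuming an idealized plane polariscope with crossed polarizers and a sheet of uniform thickness; no measurements of these pieces are implied. Light passing through a stressed transparent sheet emerges with intensity

$$
I = I_0 \,\sin^2(2\varphi)\,\sin^2\!\left(\frac{\delta}{2}\right),
\qquad
\delta = \frac{2\pi\, C\, t}{\lambda}\,(\sigma_1 - \sigma_2)
$$

where $I_0$ is the intensity leaving the first polarizer, $\varphi$ is the angle between the polarizer axis and a principal stress direction in the plastic, $\delta$ is the relative retardation, $C$ is the material's stress-optic coefficient, $t$ its thickness, $\lambda$ the wavelength of the light, and $\sigma_1 - \sigma_2$ the difference between the principal stresses. Rotating the polarizers changes $\varphi$, so different regions go dark wherever $\sin(2\varphi) = 0$. Because $\delta$ depends on $\lambda$, each wavelength is extinguished at a different stress level, which is why white light passing through the stressed plastic shows bands of color.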

The sculptural aspect is important because it engages the sense of touch in combination with sight. The audience uses two senses to approach the piece fully. It demands direct interaction, and then a connection between movement and the shifting image. The piece is powered by nervous and electrical connections, both of which are needed when it is being interacted with.

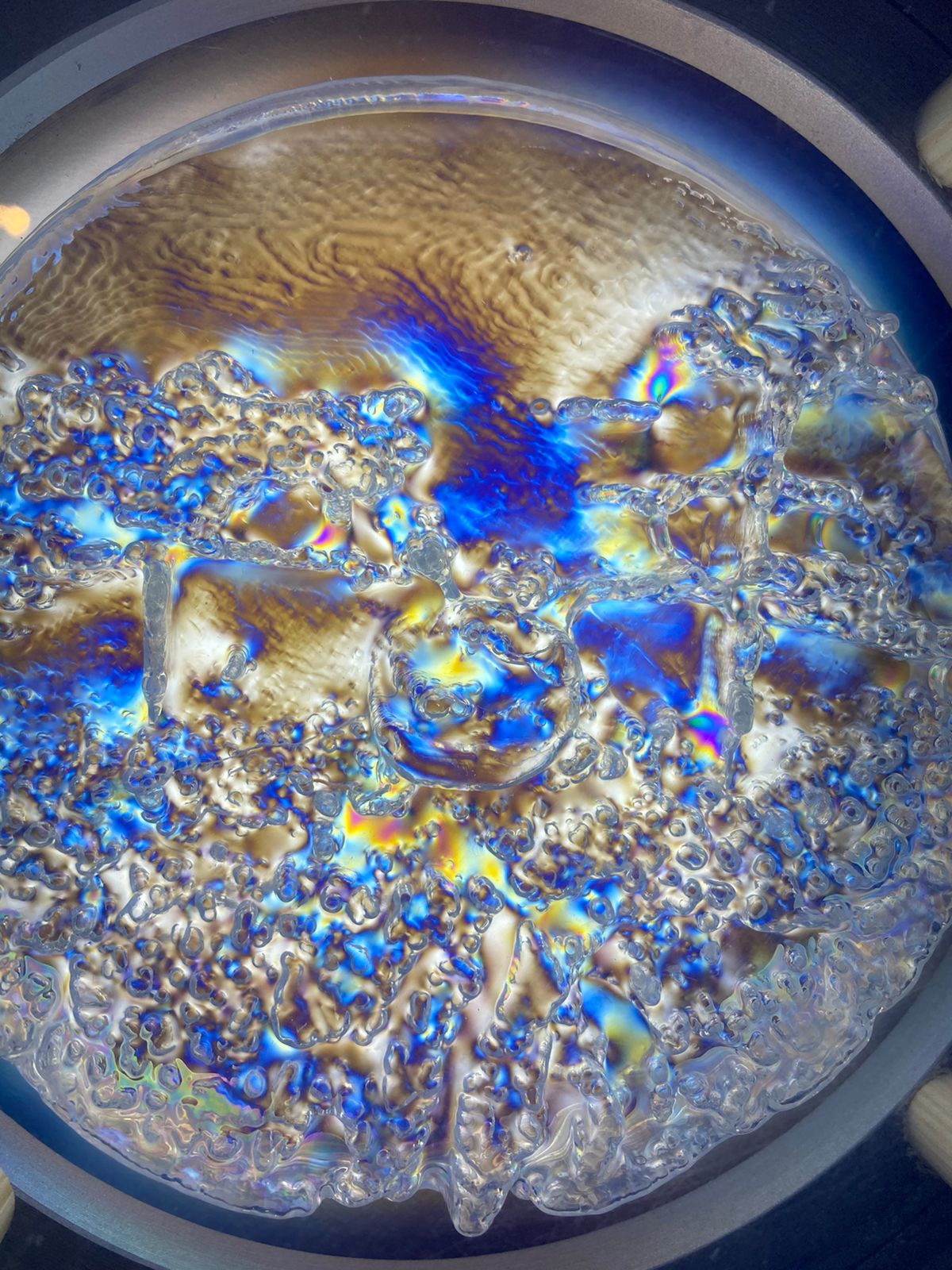

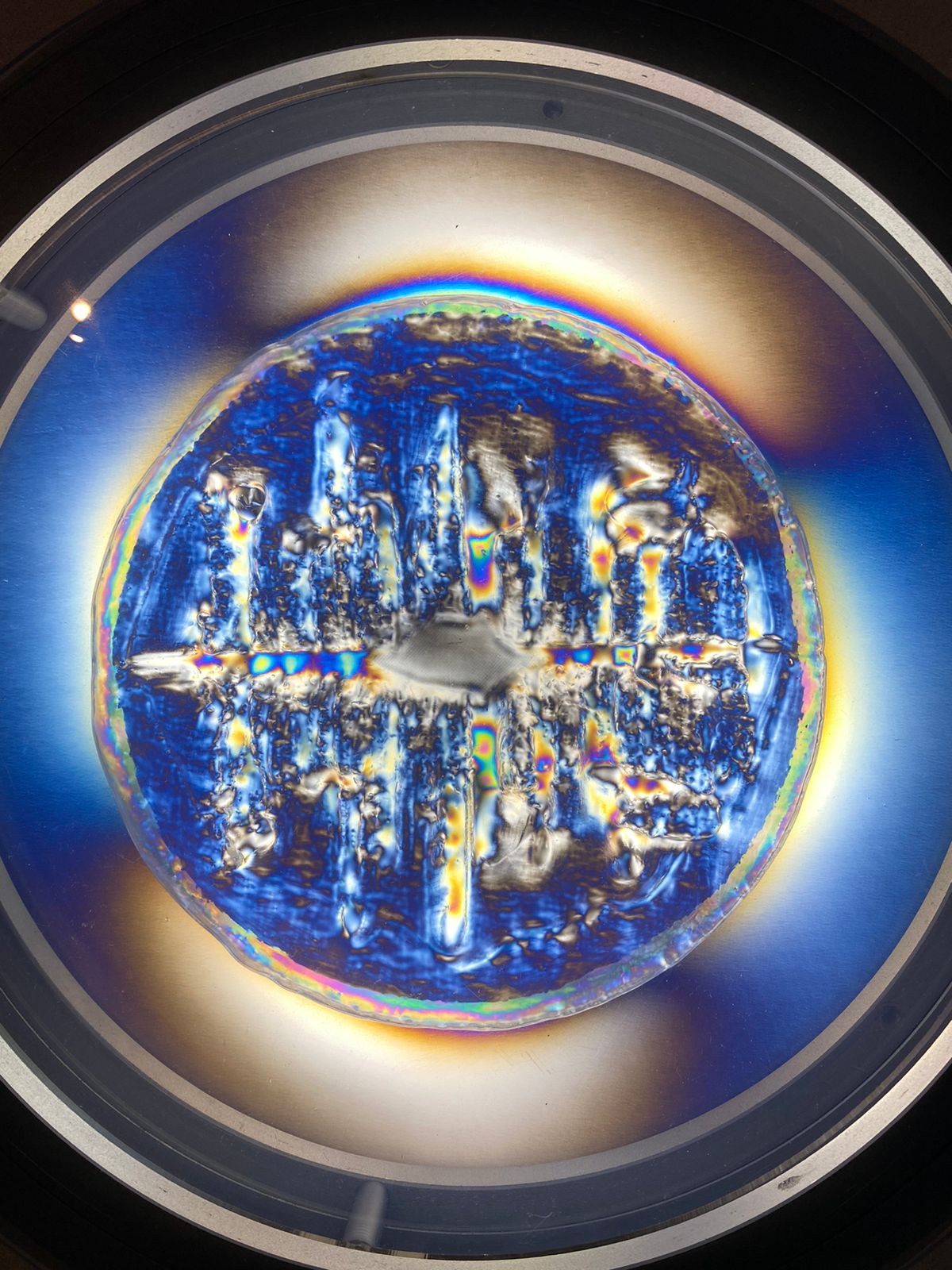

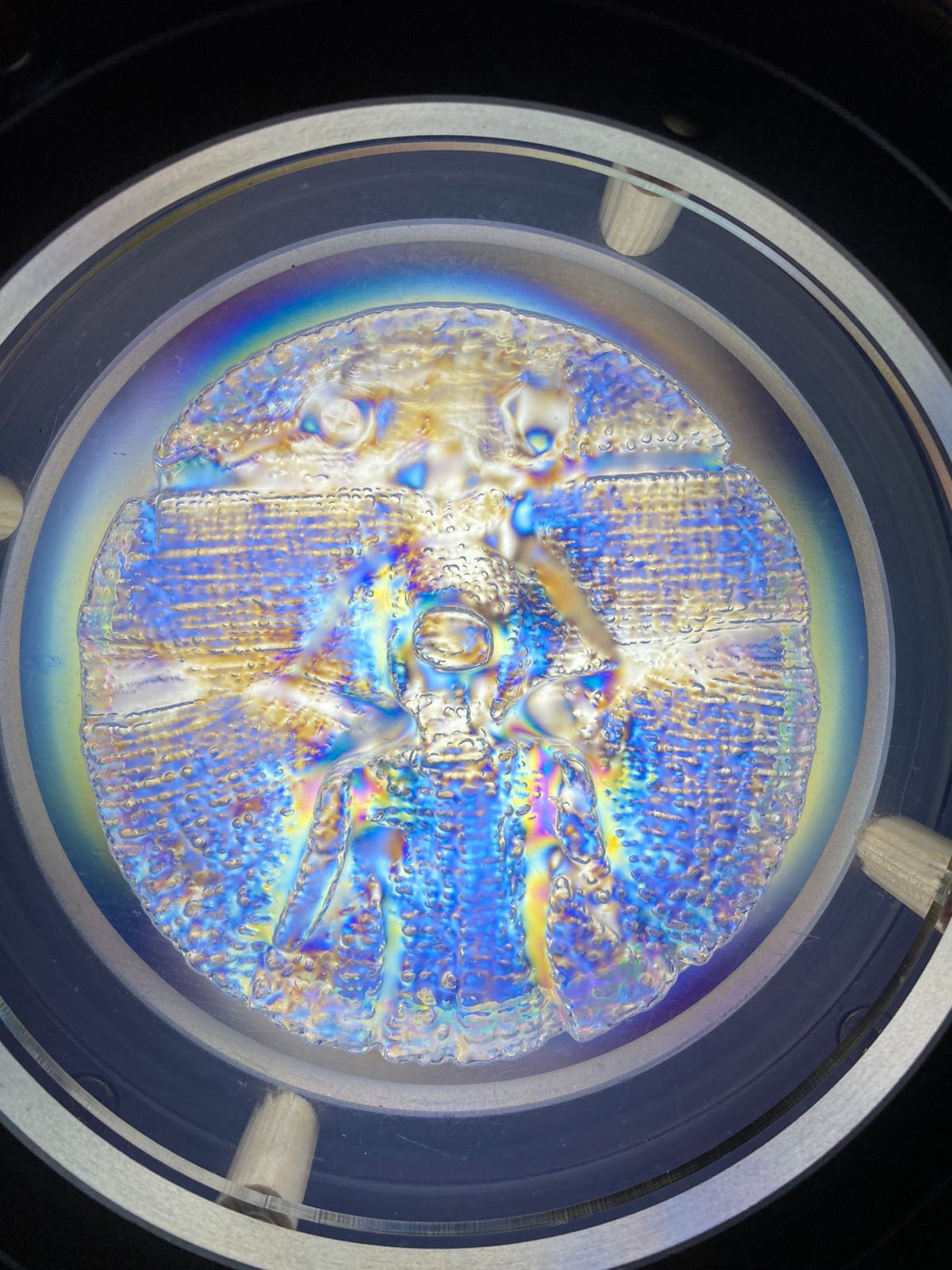

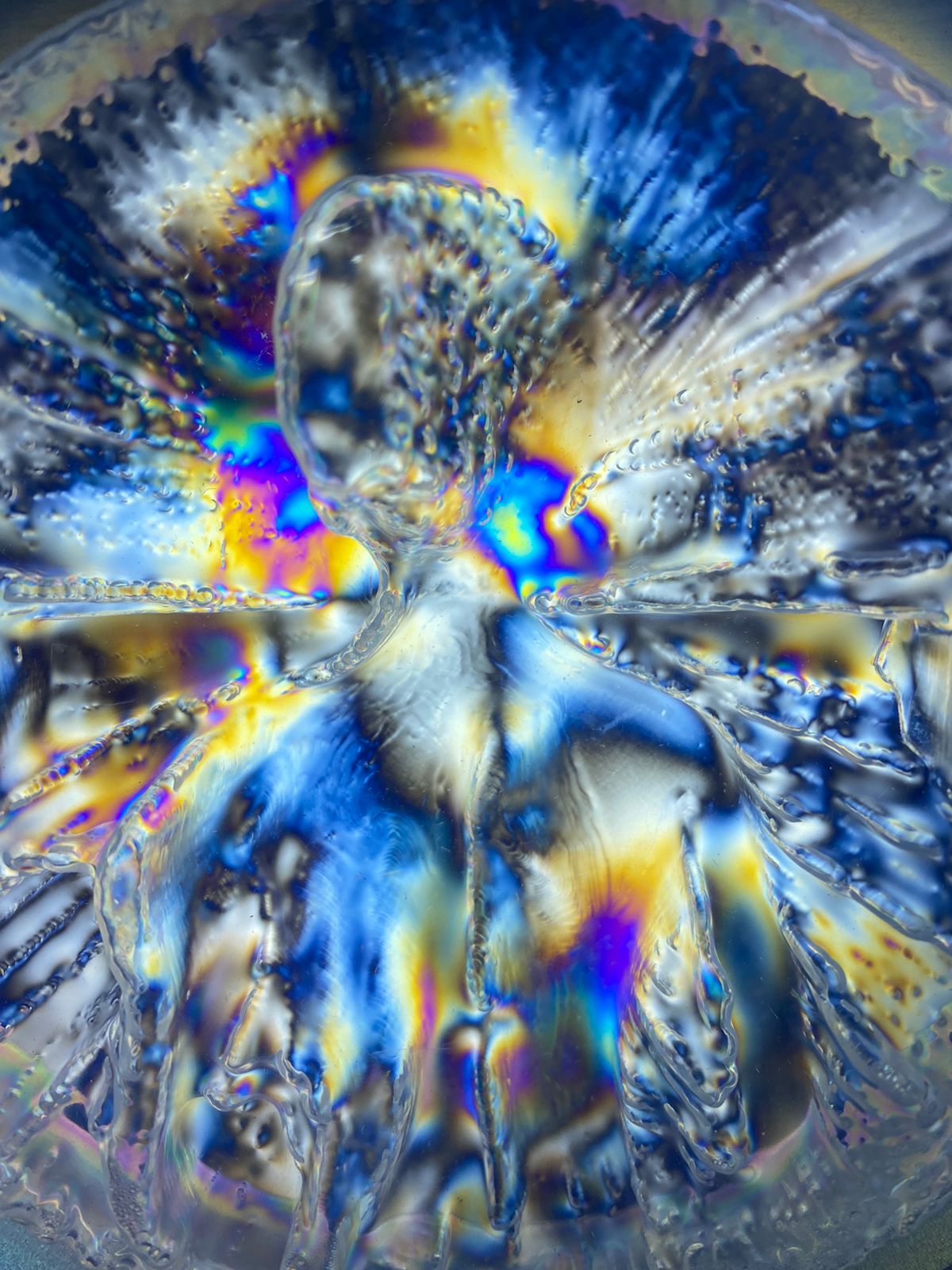

The surface is animated. Volumes and figures emerge out of the digital interpretation of darker and lighter areas. Shades create a space in which to build exponential altitude. Within the transparent layers, colors seem to appear, when in reality we are only reaching the limit of our human capacity for color perception through polarized light.

Are the pieces light sculptures?

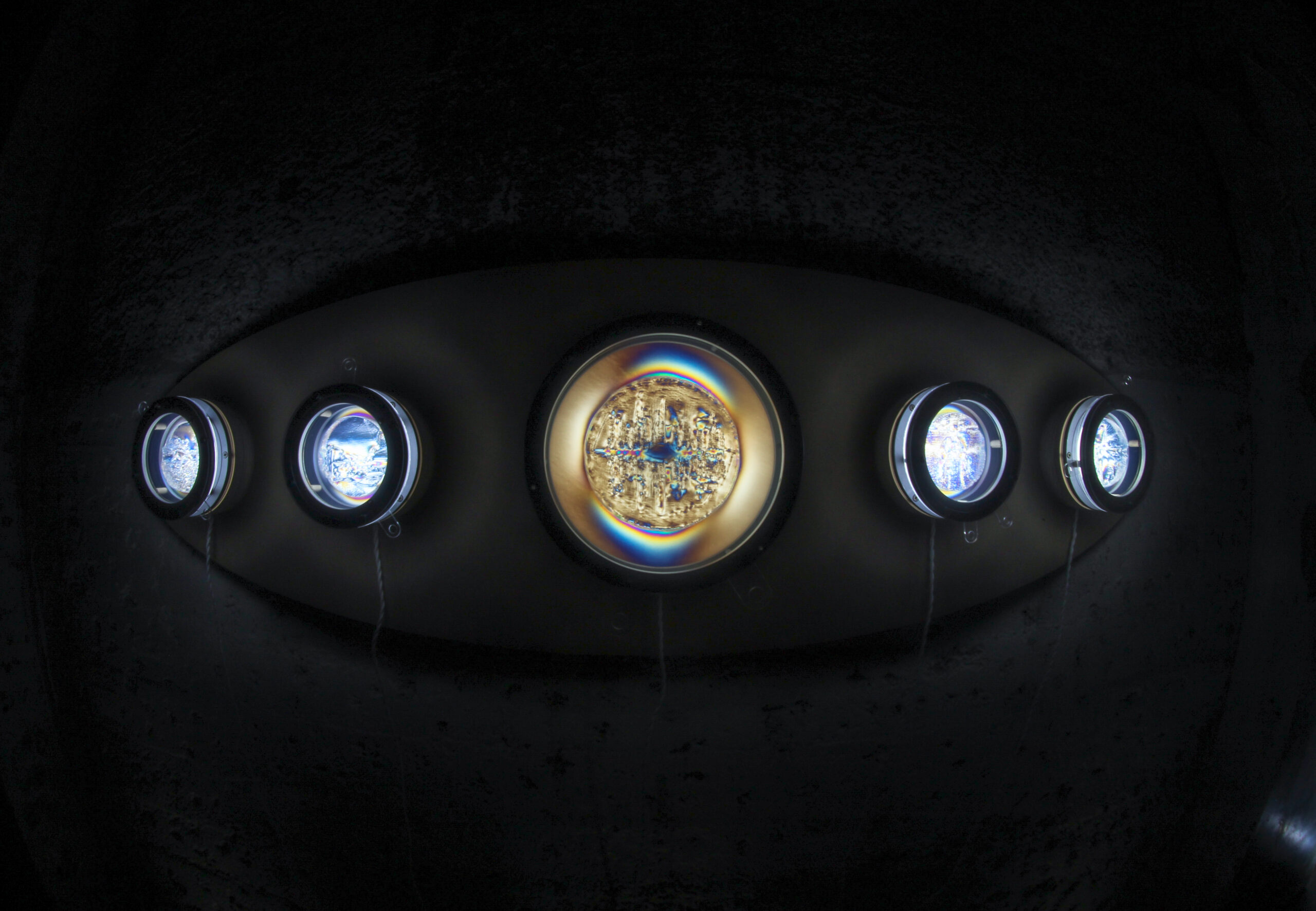

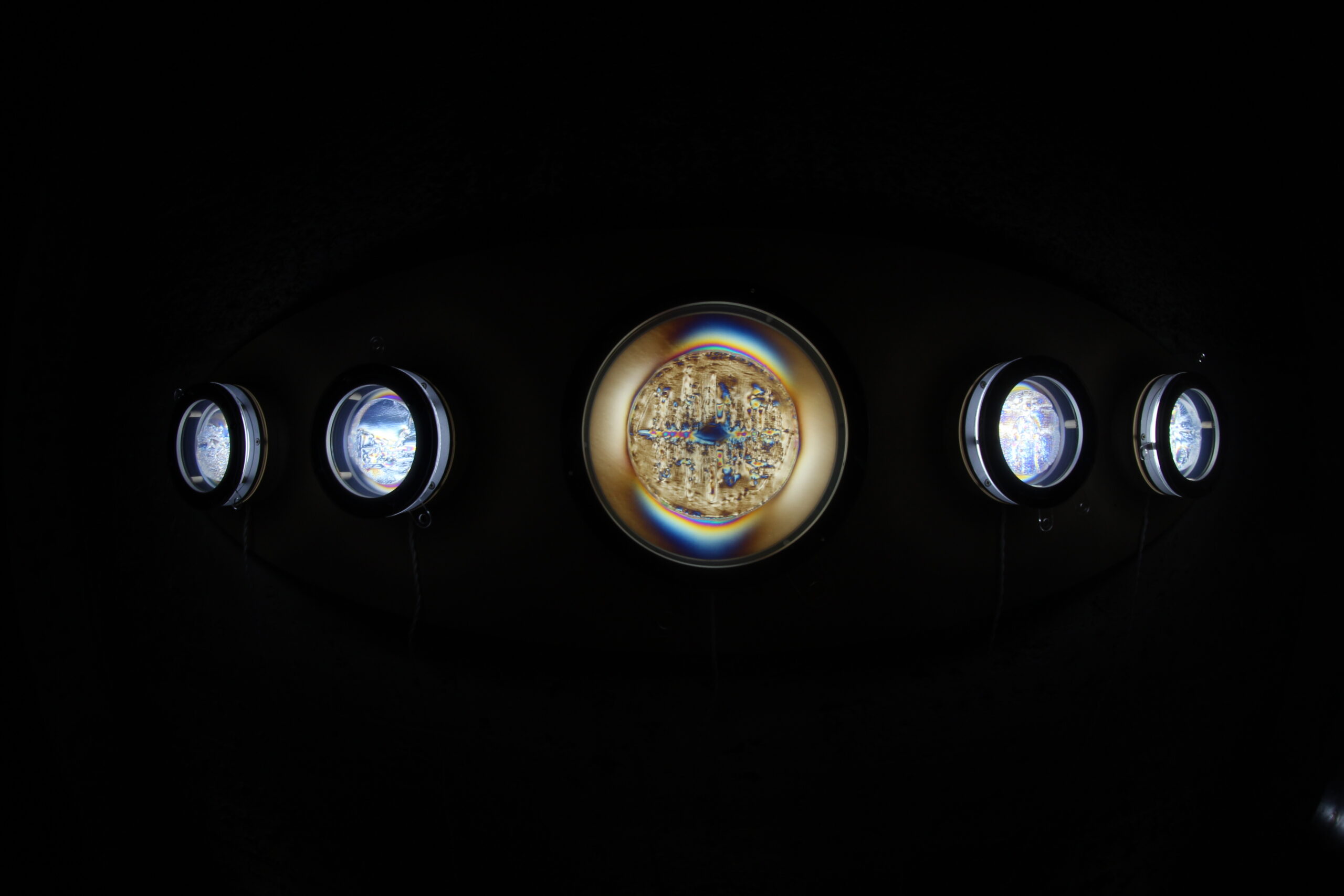

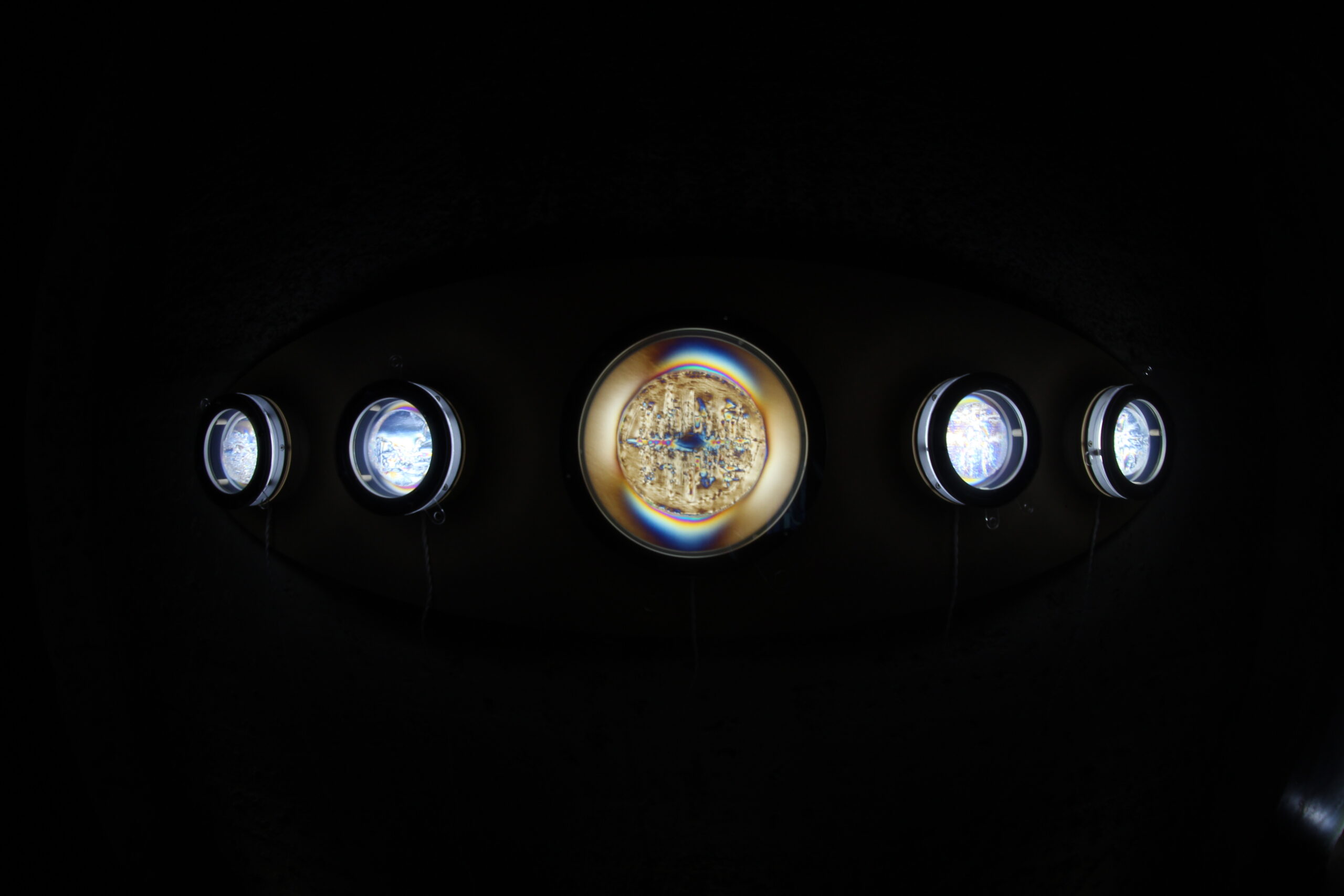

The curators of the Dilston show classified the piece as “Light sculptures”. This inspired the description of the piece, ‘Unknown realities’: It is made up of five light sculptures. Each one has a single AI-generated, vacuum-formed image that gets polarized. The polarizer orientation is manually controlled, which shifts how the stress in the material becomes visible, so there is no single version of what the audience sees. As mentioned, the images are based on AI’s interpretation of the word ‘reality’. Since AI is built on an input of previously generated images, they reflect our own constructs back at us.

I am happy with this description; however, a lot of information is being left out.

Through this piece I try to question whether we can trust the accuracy of the perceptual skills we are born with and used to. The focus is on the uncommon aspects of materiality.

This exploration of material presents the process as levels of understanding. Different levels of reality are presented through contrast. Does knowing it is artificial intelligence have an effect on how the piece is perceived? And if so, what is that effect? Also, how do intersectionality and different cultural backgrounds affect interpretations of AI-generated images?

These are difficult questions; however, trying to understand the possible interpretations could help answer them. One example is applying the notion of cultural appropriation to artificial intelligence. We could argue that the language and codes used are human, while the visual outcomes are not, even though the original inputs they are based on were. So, is AI its own culture?

Artificial intelligence reminds me of the rise of Vaporwave culture, in that neither takes aspects from one specific cultural background; rather, both try to address an aesthetic universality. The difference is that we now have an automated, algorithmic process to do so. The relationship between cultural appropriation and AI-generated visual outcomes could be developed further through language and how we are equipped to understand other systems of communication.

When it comes to the process, however, some questions arise unexpectedly, for example around perception and material imperfections. Materials like HIPS sometimes have scratches, so an immediate redesign and replacement of the piece, where possible, is mandatory. The layer people interact with is an acrylic handle that does not affect the polarization. It does, however, register the fingerprints of the participants, and it inevitably gets scratched over time.

I found myself obsessing over scratches. Over time, I found it interesting how much I cared about them. In the end, the piece and its materials are just a number of surfaces that absorb a history of traces over time.

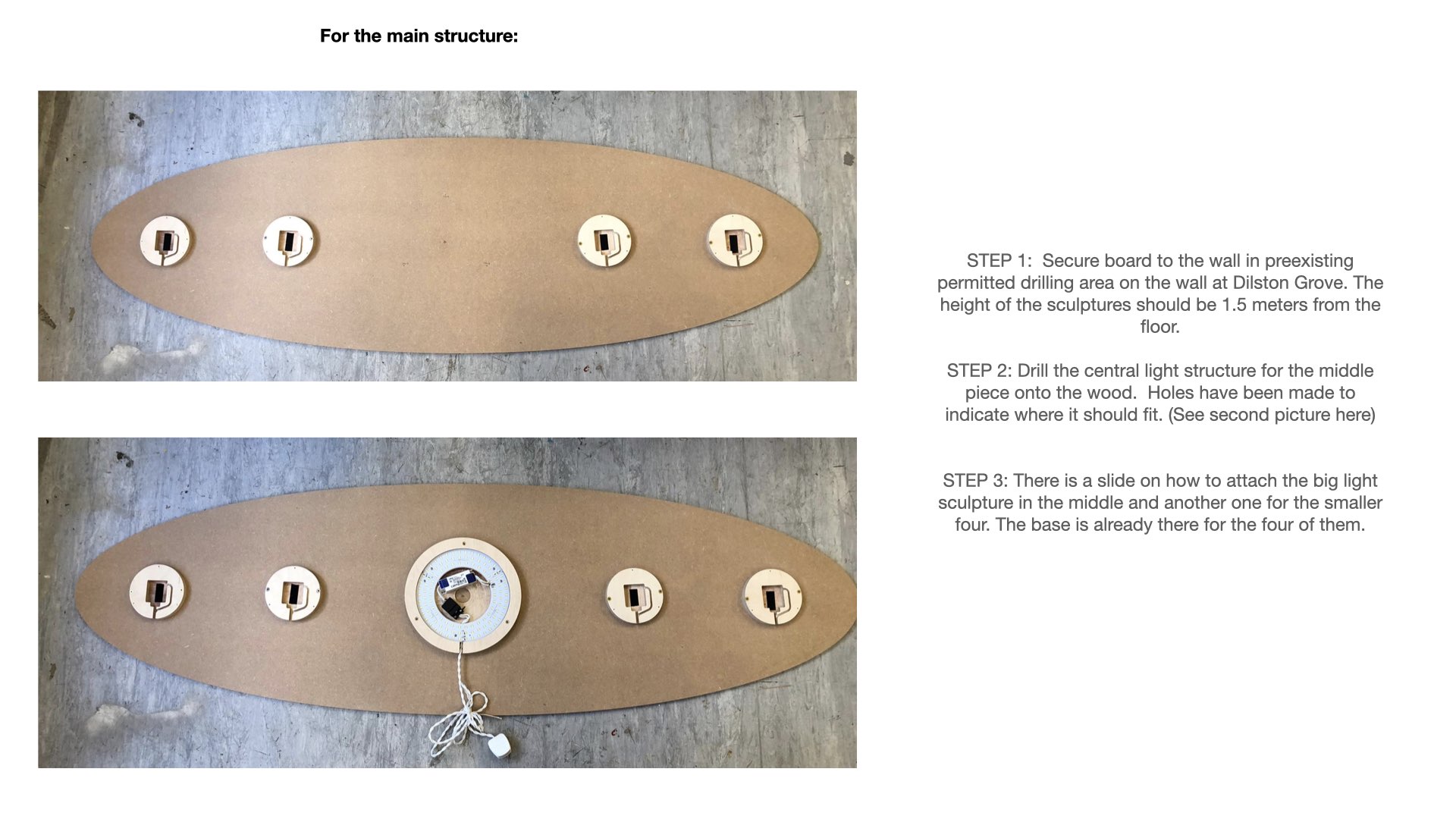

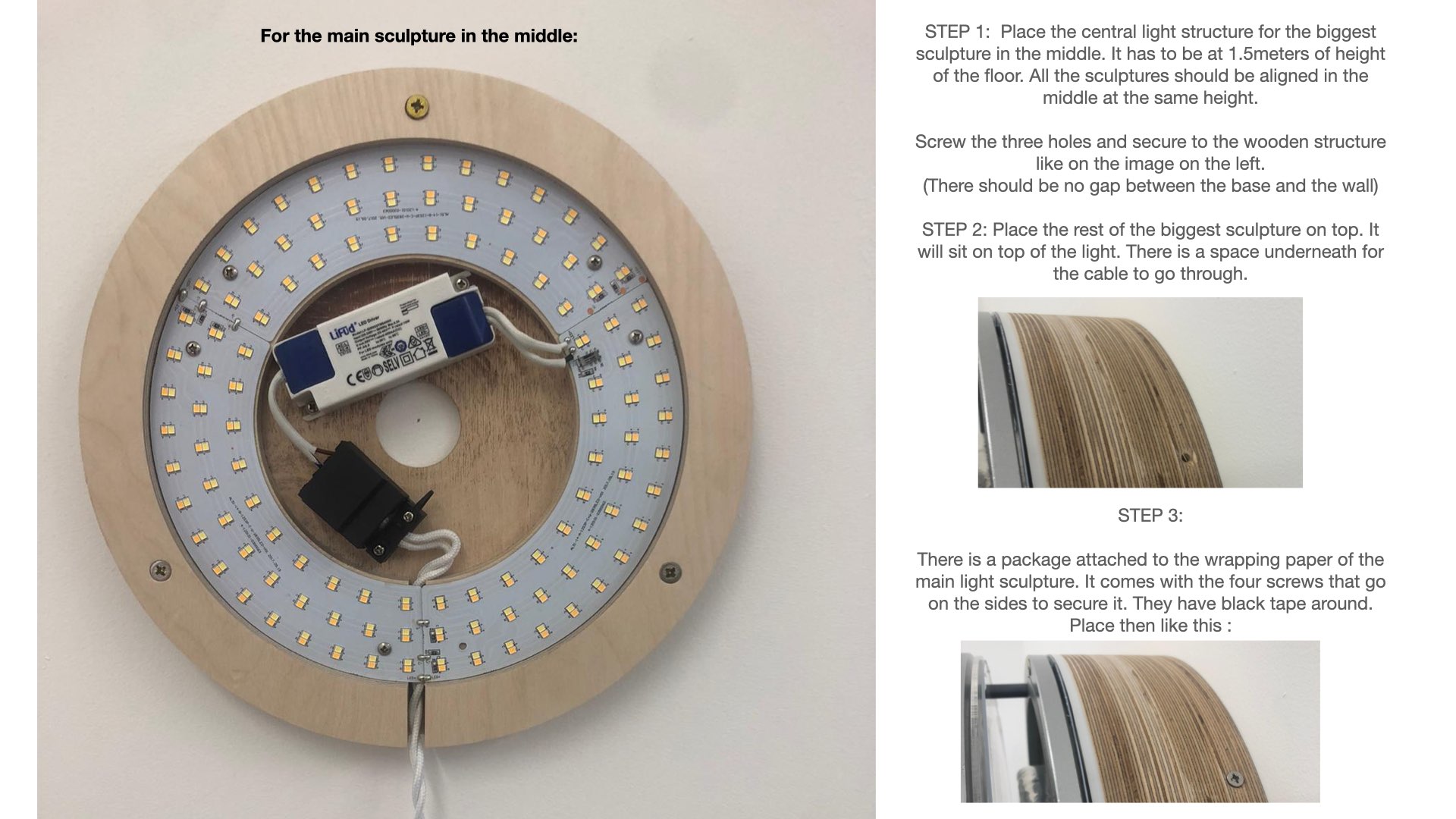

Light is crucial for identifying ourselves and recognizing our surroundings, allowing us to place ourselves in a specific time and place. Plastic, on the other hand, is human-made, unlike the wooden washers that hold the two main structural pieces together, allowing the polarizers to rotate while minimizing friction. I also find the relationship between human-made and natural materials interesting as a way of showing information differently. This is why, for example, I decided not to paint the main wooden surface that held the pieces on Dilston Gallery’s wall.

Each piece has two handles, which is crucial for enhancing the desired effect and for letting the audience control the piece. One handle controls the orientation of one of the two polarizers; the other controls a layer of HIPS that gives the light an extra layer to pass through.

I was interested in macroscopy and the limitations of the naked eye. I use tools that allow us to see further, while removing the impression that an extra device is needed to enhance the way we are used to seeing. I focused on scale, choosing one that let me display the layered materials without lenses. Not needing an “extra material” is a way of disguising enhancement: the polarizing effect happens in front of you, but you do not necessarily notice or understand it at first.

Filters, layers and lenses are great material sources for exploring further effects. One project I intend to do in the future is growing large crystals that can be polarized, and then building a device big enough to let us perceive polarizing variation without a microscope. Liquid crystals are another material I was interested in and wanted to work with; however, the most effective ones are sold by the milligram and are very difficult to find. I wanted to add an interactive experience to chemical reactions as a way to address forced change.

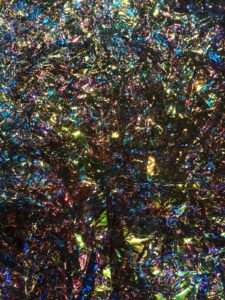

Another project I want to work on in the future is creating polarized versions of litmus cellophane material, similar to the video experiments I made at the beginning of Unit 3. I find polarized litmus cellophane fascinating because the color transitions are unexpected, and it is difficult to tell which color the one you are looking at will transition to.

Another interest of mine is “invisible colors”, the name given to the colors that appear on a white surface after you look at a color on a bright screen for a prolonged time. Overall, I am interested in the creative potential of color and its possible combinations, the interaction between light and pigment, materiality, extremes (limits) and potential perception.

For a later project I would also like to understand how birefringence can be controlled to the point where values on the photoelastic stress scale can be set deliberately. This could be engineered digitally by polarizing the image while it is being vacuum formed, which would require designing a new technology.

No object intrinsically possesses the colors we perceive. Perception works as a filter that alters, increases and diminishes. If this is true, reality as we know it is unreliable. Is it possible to grasp all meanings? The open and closed aspects of the piece can serve as a guide for starting this conversation.

I wanted the work to allow multiple interactions at the same time. This eventually happened at the Dilston Gallery closing party, where I saw more than one person interacting with the piece at once. This was an exciting experience for me, and the piece also behaved as I expected, which for me was a big step.

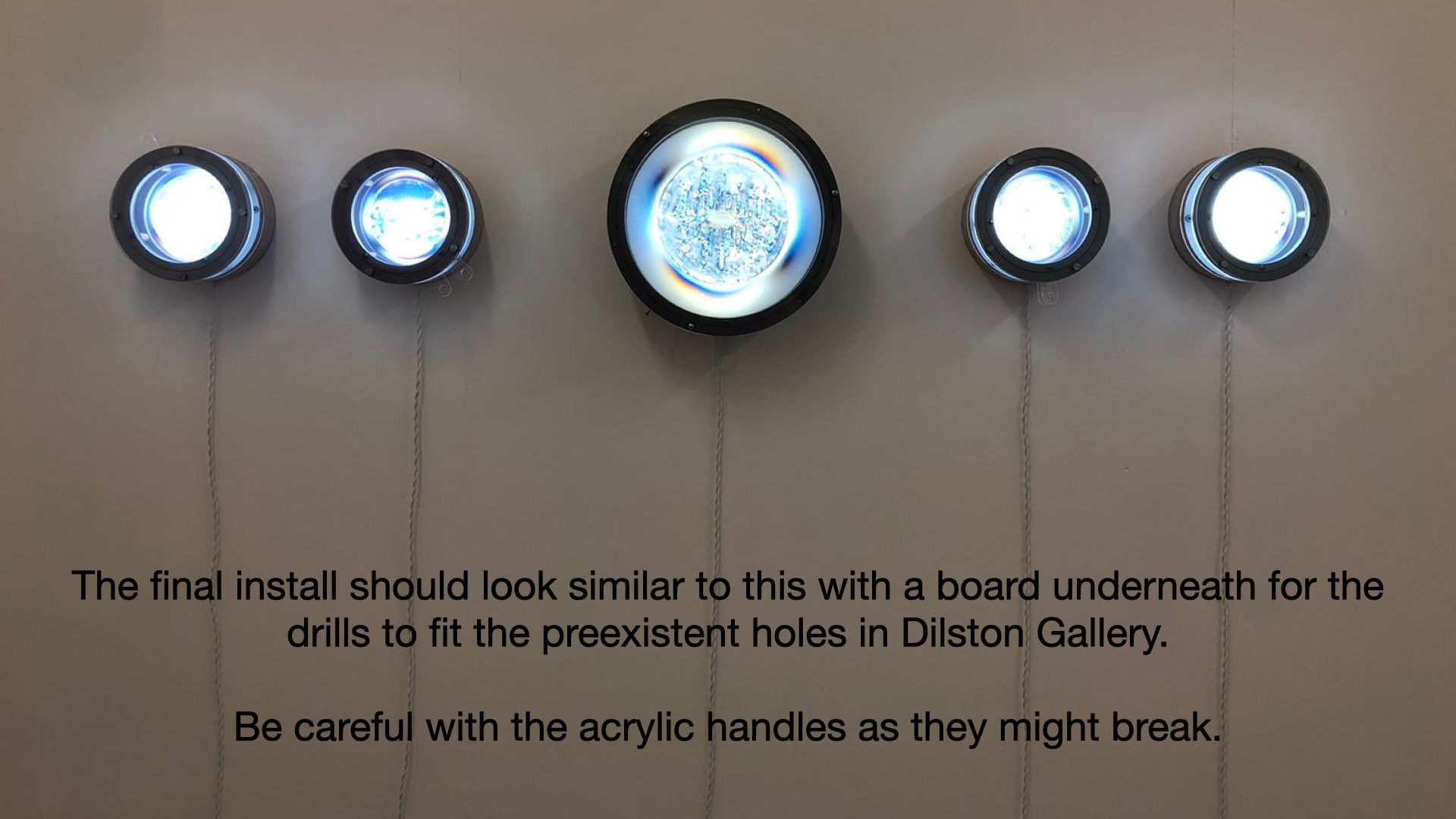

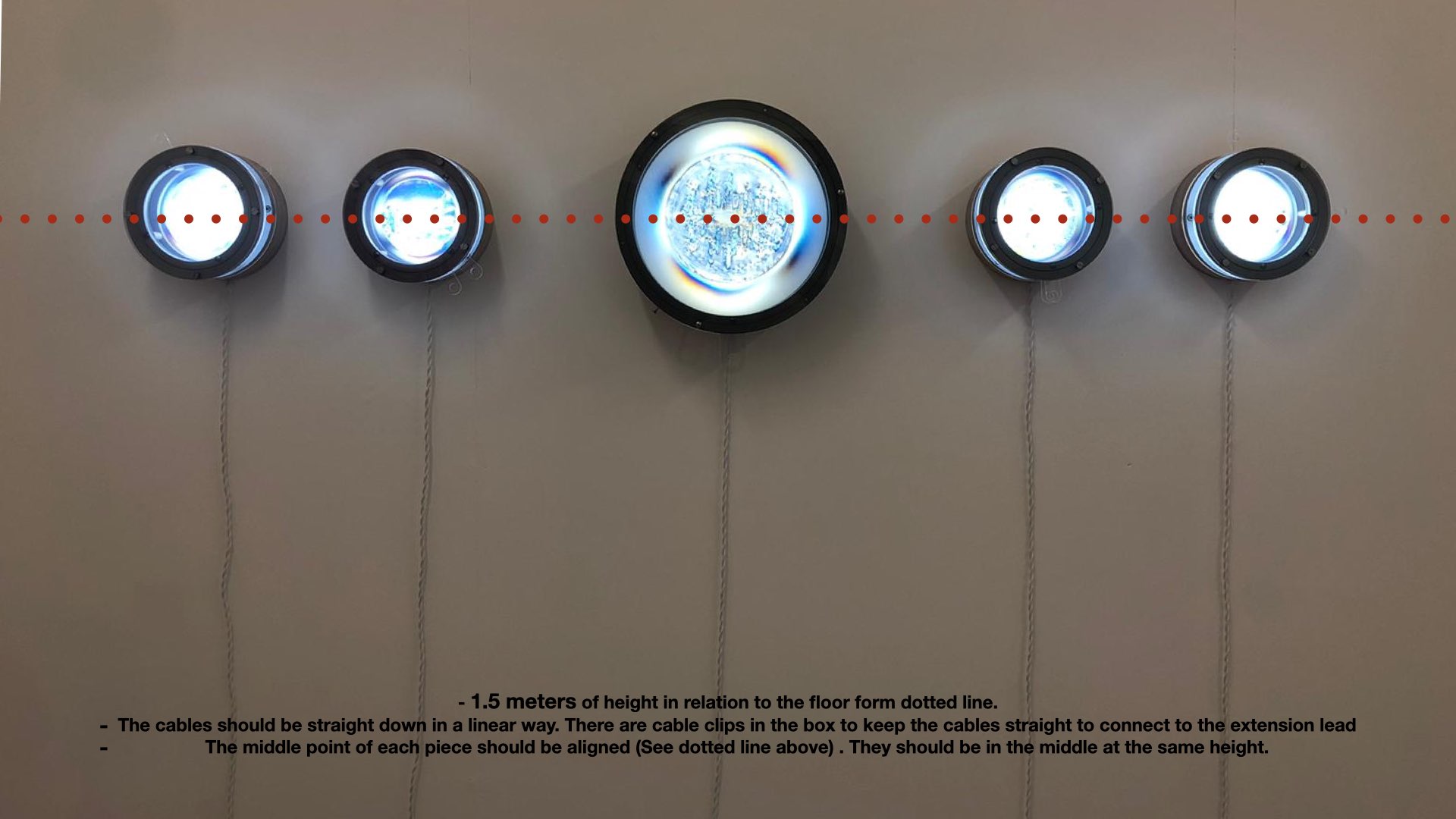

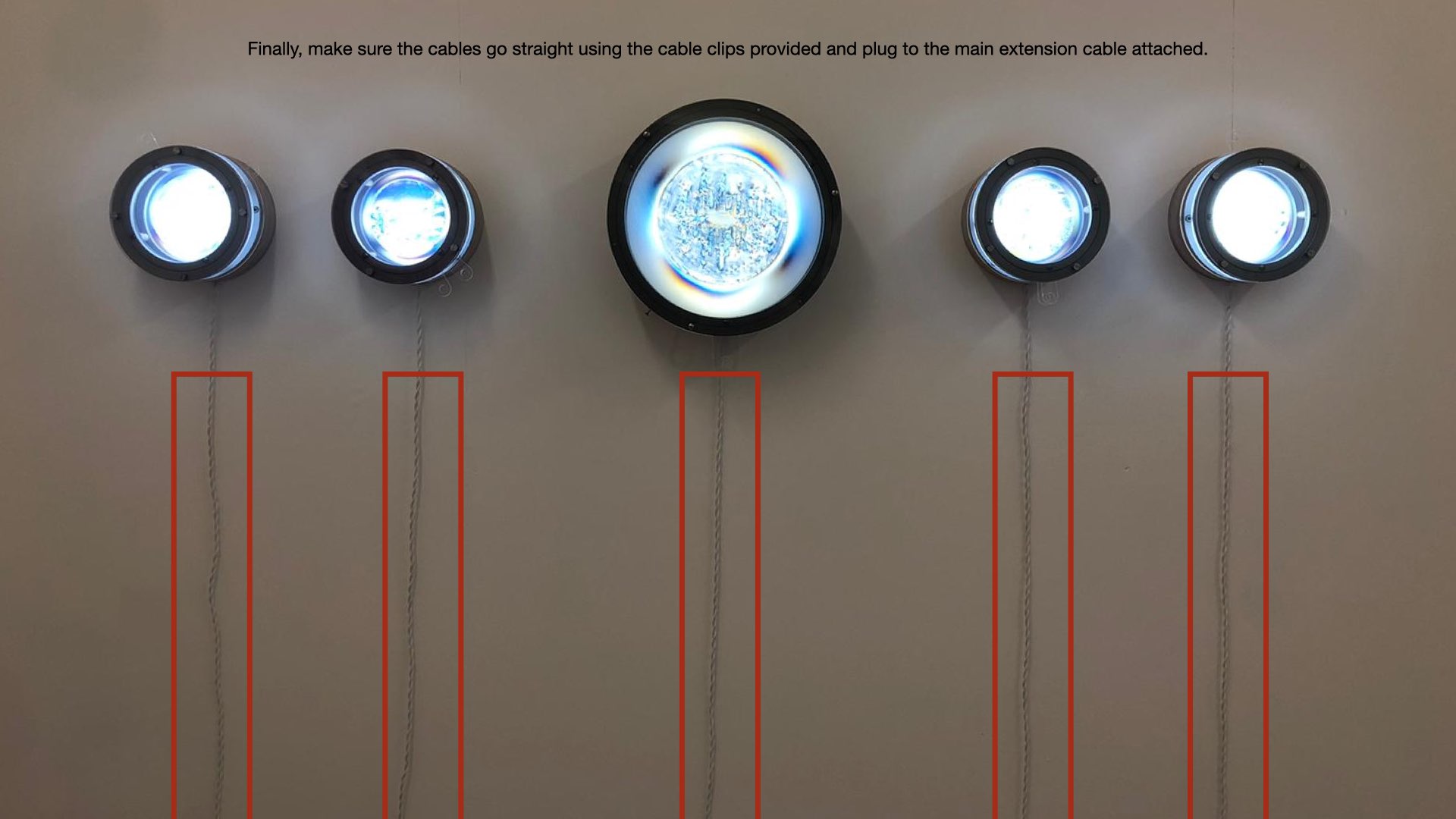

Before the show, I installed the work on the studio wall to make sure everything worked, to visualize the piece without the wooden margin, and to inform the assembly instructions. I attached the assembly instructions to the outside of the boxes holding the pieces. These were the most important guidelines for installing the piece:

At the top, the HIPS plastic being heated before vacuum forming. At the bottom, an example of the vacuum forming process. This image was vacuum formed so many times that a lot of detail and texture was lost. The mold feels thickened because plastic repeatedly melted on top of it when heated and then had pressure applied more than once. The solution is to reprint the whole piece, which takes a lot of time (a 10cm mold takes approximately 6 hours to print).

At the top, an example of an image before and after printing, vacuum forming and polarizing. At the bottom, an example of the effect produced by rotating a third filter placed at the camera lens while rotating the structure. The light is blocked when the filters are crossed (oriented at right angles to each other). According to an article from Scientific American:

“If you take two crossed polarizers (for example, a horizontal and vertical one), no light will get through them. Yet when you insert a third polarizer between the two, oriented diagonally, then some photons make it through.” (Hillmer & Kwiat, 2007) In the piece itself, however, you will see two linear polarizers whose orientation is changed manually.
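The three-polarizer result quoted above follows from Malus’s law, under which light already polarized at some angle keeps a fraction $\cos^2\theta$ of its intensity after a polarizer rotated by $\theta$ relative to it. The sketch below assumes ideal polarizers (real sheet polarizers also absorb some light) and unpolarized incoming light, of which the first polarizer passes half; the function name `transmitted` is hypothetical.

```python
import math
from typing import Sequence

def transmitted(i0: float, angles_deg: Sequence[float]) -> float:
    """Intensity of initially unpolarized light after ideal linear polarizers.

    angles_deg lists each polarizer's transmission axis in degrees, in the
    order the light meets them. The first polarizer passes half of the
    unpolarized light; each later one applies Malus's law,
    I = I_prev * cos^2(angle difference).
    """
    if not angles_deg:
        return i0
    intensity = i0 / 2
    for prev, nxt in zip(angles_deg, angles_deg[1:]):
        intensity *= math.cos(math.radians(nxt - prev)) ** 2
    return intensity
```

Crossed polarizers (`[0, 90]`) give essentially zero, while inserting a diagonal one (`[0, 45, 90]`) lets through one eighth of the original intensity, since each of the two 45° steps keeps half. With the two polarizers in the piece, the same law means the brightness of unstressed areas varies smoothly with the angle the audience turns the handle to.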

These are the final images for the piece “Unknown realities” at Dilston Gallery. At the top are the original images in order, and at the bottom the final polarized results. They were shown in this order. The middle piece is 35cm in diameter and the other four are 20cm.

Finally, I will talk about the Research Festival, the ideas I want to discuss and what I plan to do to make them work. The sculptures worked as a final object that went from text to image and then back to text in order to make sense of it, unpick the processes and understand how text and image affect each other in a dual relationship. For the Research Festival I intend to focus mainly on text, using a similar procedure.

“Without Pretensions” is a novel I started writing in 2020, when the pandemic began. It is an exploration of writing in the first person that compiles texts I have written since I was young. What interested me was the notion of writing without filtering thoughts or attributing more importance to some ideas than to others. I was curious about what happens when hierarchy and editing filters are removed from the creative process.

This text, however, is being used to experiment with the relationship between text distortion and image as a grounding point. When images are processed automatically with online software such as PhotoMosh, every version generates a completely new image, and the only point of reference is the original image one keeps. I am interested, however, in keeping certain images as a reference point for what does not change. This way, the images serve as anchors.

I was intrigued by passing text back and forth through different AI translation programs and by how this could change the meaning of the input. The only way to recover the original meaning is to go back to the original text. However, automated mistakes are interesting because they might expose underlying discourses, revealing the text as a mediator in a conversation among these different programs.
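The back-and-forth translation can be sketched as a loop that passes the text through a chain of translation steps, keeps every generation, and measures how far each one has drifted from the original, which stays untouched as the control. This is a minimal sketch under loud assumptions: `to_spanish` and `to_english` are hypothetical stand-ins for real translation programs (no specific translation API is assumed or called), and the drift score uses Python’s standard `difflib.SequenceMatcher`, which measures character-level similarity and says nothing about meaning.

```python
from difflib import SequenceMatcher
from typing import Callable, List

Translator = Callable[[str], str]

def round_trip(text: str, steps: List[Translator], rounds: int = 1) -> List[str]:
    """Pass `text` through every step in order, `rounds` times.

    Returns all generations, starting with the untouched original (the control).
    """
    generations = [text]
    for _ in range(rounds):
        current = generations[-1]
        for step in steps:
            current = step(current)
        generations.append(current)
    return generations

def drift(original: str, version: str) -> float:
    """0.0 means identical to the original; values closer to 1.0 mean less in common."""
    return 1.0 - SequenceMatcher(None, original, version).ratio()

# Hypothetical stand-ins for two translation programs. Each round trip adds a
# small error, so mistakes accumulate from one generation to the next.
def to_spanish(s: str) -> str:
    return s.replace("Without", "Sin")

def to_english(s: str) -> str:
    return s.replace("Sin", "Without any")

if __name__ == "__main__":
    versions = round_trip("Without Pretensions", [to_spanish, to_english], rounds=2)
    for v in versions:
        print(f"{drift(versions[0], v):.2f}  {v}")
```

Keeping every generation, rather than only the last one, mirrors the idea of several seemingly identical books alongside a control: each version can be compared against the original to see where the accumulated distortion entered.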

In “Systems of representation”, Stuart Hall mentions:

“To belong to a culture is to belong to roughly the same conceptual and linguistic universe, to know how concepts and ideas translate into different languages, and how language can be interpreted to refer to or reference the world. To share these things is to see the world from within the same conceptual map and to make sense of it through the same language systems” (Hall, 2013)

So text distortion can become cultural distortion as well. AI mimics human properties to reduce error as much as possible and make translation as unnoticeable as possible, so the generation of new, accidental meanings is fascinating: it demonstrates an external participation, or a cultural overlay.

Helen Marten presents this conceptual segmentation for dissecting an artwork in Drunk Brown House. The concepts are: “Diagram, Material, Quality, Action and Grammar” (Marten, 2016). I believe the relationship between these concepts is key to understanding the different, often unnoticed, aspects of publishing.

The display of information will need a medium that deteriorates slowly through interaction. The concept of action is crucial in this context, as there is a back-and-forth conversation that begins with me and is then interpreted by AI. I intend to share the process of generating distorted content, which the audience will ultimately access through four seemingly identical books.

The publication will include a small number of images that will function as the constant (grammar). I considered distorting these images so they would become more diffuse as the translated text became more distorted; however, I think it is more interesting to provide a control book, so the audience can experience the contrast between the shifting and the control or, in Deleuze’s words, repetition and difference.

Bibliography for this section:

DALL·E mini by craiyon.com on Hugging Face. (n.d.). Retrieved November 4, 2022, from https://huggingface.co/spaces/dalle-mini/dalle-mini

Hall, S. (2013). Representation: Cultural representations and signifying practices. Sage.

Hillmer, R., & Kwiat, P. (2007, April 16). Answer to the three-polarizer puzzle featured in the print edition. Scientific American. Retrieved November 12, 2022, from https://www.scientificamerican.com/article/quantum-eraser-answer-to-three-polarizer-puzzle/

Marten, H., Jeppesen, T., Myles, E., & Dillon, B. (2016). Drunk brown house. Serpentine Galleries.

Zyro. (2019). AI image upscaler online – Free image enhancer. Zyro. Retrieved November 9, 2022, from https://zyro.com/tools/image-upscaler

These experiments with cellophane allow me to continue exploring the effect that light and polarization have on different surfaces, and to understand the reasons behind the specific changes from one color to another.

Finally, here is a gallery of final images taken from the shows at Wilson Road and Dilston Gallery: